Various computer scientists, researchers, lawyers and other techies have recently been attending bi-monthly meetings in Montreal to discuss life's big questions -- as they relate to our increasingly intelligent machines.

Should a computer give medical advice? Is it acceptable for the legal system to use algorithms in order to decide whether convicts get paroled? Can an artificial agent that spouts racial slurs be held culpable?

And perhaps most pressing for many people: Are Facebook and other social media applications capable of knowing when a user is depressed or suffering a manic episode -- and are these people being targeted with online advertisements in order to exploit them at their most vulnerable?

Google, Microsoft, Facebook and recently even the Royal Bank of Canada have announced millions of dollars in investment in artificial intelligence labs across Montreal, helping to make the city a world leader in machine-learning development.

As a consequence, researchers such as Abhishek Gupta are trying to help Montreal lead the world in ensuring AI is developed responsibly.

"The spotlight of the world is on (Montreal)," said Gupta, an AI ethics researcher at McGill University who is also a software developer in cybersecurity at Ericsson.

His bi-monthly "AI ethics meet-up" brings together people from around the city who want to influence the way researchers are thinking about machine-learning.

"In the past two months we've had six new AI labs open in Montreal," Gupta said. "It makes complete sense we would also be the ones who would help guide the discussion on how to do it ethically."

In November, Gupta and Universite de Montreal researchers helped create the Montreal Declaration for a Responsible Development of Artificial Intelligence, a series of principles seeking to guide the evolution of AI in the city and across the planet.

The declaration is meant to be a collaborative project, and its creators are accepting comments and ideas over the next several months on how to fine-tune the document and share it with computer scientists working internationally on machine-learning.

Its principles are broken down into seven themes: well-being, autonomy, justice, privacy, knowledge, democracy and responsibility.

During a recent ethics meet-up, Gupta and about 20 other people talked about justice and privacy.

Lawyers, business people, researchers and others discussed issues such as whether to push back against the concentration of so much power and wealth in the hands of a few AI companies.

"How do we ensure that the benefits of AI are available to everyone?" Gupta asked his group. "What types of legal decisions can we delegate to AI?"

Doina Precup, a McGill University computer science professor and the Montreal head of DeepMind, a famous U.K.-based AI company, says it isn't a coincidence Quebec's metropolis is trying to take the lead on AI ethics.

She said the global industry is starting to be preoccupied with the societal consequences of machine-learning, and Canadian values encourage the discussion.

"Montreal is a little ahead because we are in Canada," Precup said. "Canada, compared to other parts of the world, has a different set of values that are more oriented towards ensuring everybody's wellness. The background and culture of the country and the city matter a lot."

AI is everywhere, from the algorithms that help us read weather patterns and filter news on our Facebook feeds to autonomous weapon systems.

A major ethical quandary most people will soon have to deal with involves self-driving cars.

Cars are a classic example, Gupta explains. For instance, he asks, should car companies produce autonomous vehicles that are programmed to maximize driver safety or pedestrian safety?

"What if you're in the car and the pedestrian is your child?" Gupta asks.

Nick Bostrom, a Swedish philosopher and one of the world's pre-eminent thinkers on AI, said "it's quite amazing how central a role Canada has played in creating this deep-learning revolution."

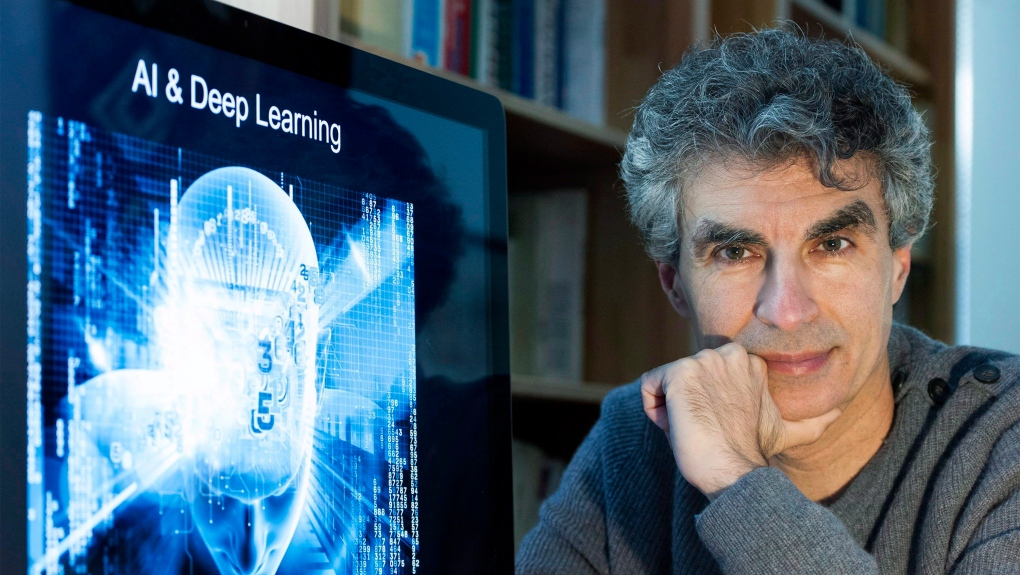

While many of the AI field's pioneers in the United States and elsewhere have left academia and entered the business world, Canada's leaders, such as Universite de Montreal's Yoshua Bengio, have had different priorities, Bostrom said in an interview from Britain.

"Bengio is still teaching students and nurturing the next generation of research talent -- he's also been relatively involved in trying to think about the ethical dimensions of this," said the author of a seminal book on AI, "Superintelligence," published in 2014.

Bostrom said there is a role for the international AI research community to reflect and try to develop a "shared sense of norms and purpose that can be influential" because it will put pressure on companies to hire people who care about developing machine-learning responsibly.

"If you are a corporation that wants to be at the forefront you need to hire the very top talent -- and they have many options available to them," Bostrom said.

"If your corporation is seen as running roughshod over this shared sense of responsibility you are going to find it harder to get the very top people to work with you."